I'm participating in the 3Blue1Brown Summer of Math Exposition (SoME). It's essentially an online festival of explaining anything mathematical. Brilliant.org stepped in with prize money, which formalized the exposition as a contest for the best entries as judged by the organizers and peer reviewers. The entrants themselves serve as the peer reviewers, using a novel voting system called Gavel, which is implemented in Python.

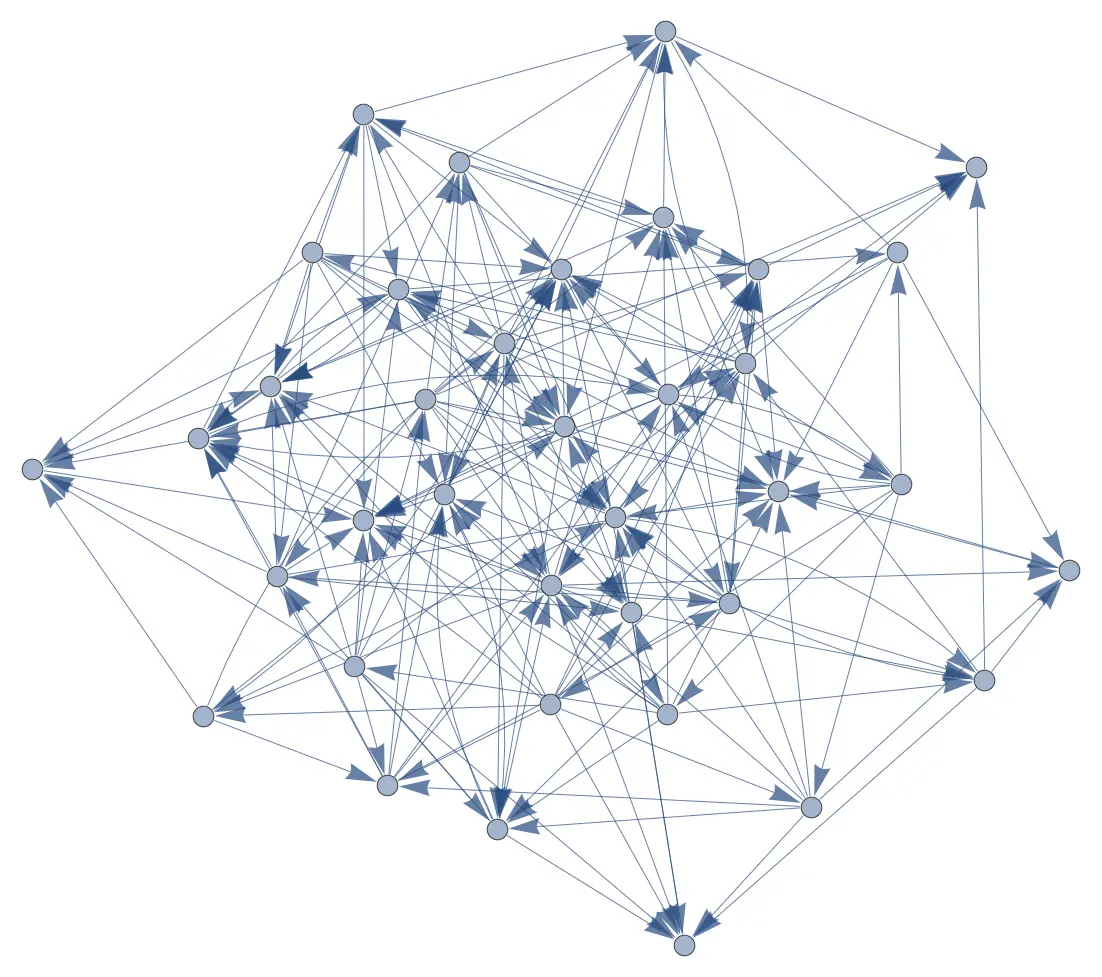

Gavel implements an idea that I'm calling a "Relativistic Judgement" system. A participant is shown two entries and must judge one as better than the other based on a set of criteria. Then a third entry is shown and the participant must choose between the most recently viewed (number 2) entry and the new (number 3) entry. This growing chain of review pairs continues until the reviewer's patience runs out or the reviewer drops from exhaustion.
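To make the chain concrete, here is a minimal sketch in Python of how the review pairs form. This is only my illustration of the idea described above, not Gavel's actual code; the function name `chained_pairs` and the entry labels are hypothetical.

```python
from typing import List, Tuple


def chained_pairs(entries: List[str]) -> List[Tuple[str, str]]:
    """Pair each viewed entry with the one viewed just before it.

    Hypothetical helper for illustration only; not part of Gavel.
    """
    # zip stops at the shorter sequence, so n entries yield n - 1 pairs.
    return list(zip(entries, entries[1:]))


# Entries in the order a reviewer happened to see them (made-up labels).
viewed = ["entry 1", "entry 2", "entry 3", "entry 4"]

for previous, current in chained_pairs(viewed):
    print(f"judge: {previous} vs. {current}")
```

Each entry after the first adds exactly one judgement, so a reviewer who views n entries contributes n - 1 comparisons.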

At the time of this posting, the reviewing process is in progress. The end result of the effort is yet unknown. I'll post an update next month when the process completes.

This whole system of relative judgement is quite fascinating. Over my career, I witnessed or participated in Mozilla skunkworks projects that had a similar form: a novel, newly published mathematical method of drawing conclusions from a data set, and a self-selected population that produces the data. At Mozilla, it was all "Likes" and "Activity Streams" with the additional complications of privacy and anonymity.

The organizers of SoME are not a large, influential Silicon Valley corporation, but a small group of computer-adept academics. This project, thankfully, is free from the anonymity and privacy complications that Mozilla faced. In the similarities, though, I also see the potential for some of the same downsides. In such projects, I've often noticed that the technique used to distill the collected data didn't actually optimize for what the researchers intended or hoped.

I have observed some points of confusion among the SoME reviewers about how to use the voting system. The entries span the full gamut from elementary school level to near doctoral dissertations. Not all reviewers can understand the loftier entries, while the rudimentary entries could be uninteresting to the more advanced reviewers. The organizers' requested judgement categories are somewhat vague and open to wide interpretation. None of those criteria included "I like one better than the other". I am monitoring several of the social media sites where reviewers are discussing the judging process. Some reviewers embrace like/dislike judgements with seemingly little regard for the criteria. I'm hoping that rinses out in the final analysis and so doesn't matter.

> I learned something from every entry that I judged, even the ones I disliked or left me feeling unqualified.

"Like" is a personal thing, tightly bound to a person's inherent and likely unconscious biases. The organizers are aware of those problems, and acknowledge that they themselves have biases, such as preferring one media type over another. Would this particular self-selected group of academic content creators have a predictable common set of biases? Is the voting system's algorithm going to amplify bias and lead to a skewed result? I'm not sure how to detect such an outcome, let alone correct for it.

The entrants appear to be a very diverse group. I believe diversity is an asset, and that is a triumph right there. If we, as a group, have a common set of biases, and the voting system reflects that fact, then the voting system found common ground. Not all will be good, not all will be bad.

The software in use is not untested. It has been used several times for peer review of hackathon entries, and it has been shown to work adequately in such venues. This data set, however, appears to be nearly two orders of magnitude larger than in previous uses. I'm sure the organizers, if not directly involved in those prior uses, have at least considered the implications of scaling and the applicability to this scenario. This is an experiment, and it would be a great subject for an entry in a future iteration of this very contest.

On Saturday, I dedicated time to add twenty data points to their system. That meant watching or reading twenty-one entries. I had difficulty consistently applying the prescribed criteria. I often found myself quickly judging in my mind a like or dislike, but by force of will, I applied the criteria. I used a spreadsheet, marking each category from 0 to 5. The final judgement for an entry was the sum, and whichever entry of the pair had the higher sum got my vote. There were entries that I watched or read that were clearly beyond my education level, so I do not know how well I judged them on, for example, novelty. Did I knock somebody down a point or two in clarity because I didn't have the right educational foundation? I also noticed that my points system became less generous as I saw more videos. This is likely why the software developer of Gavel said, "Judging by assigning explicit numerical scores is inherently flawed."
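My spreadsheet method amounts to something like the following Python sketch. The names `total` and `vote` are hypothetical, as are the scores, and only two categories appear here for brevity (clarity and novelty, the two I mention above). The handling of a tie is an assumption added for completeness.

```python
from typing import Dict


def total(scores: Dict[str, int]) -> int:
    """Sum the per-category marks, each of which must be between 0 and 5."""
    for category, value in scores.items():
        if not 0 <= value <= 5:
            raise ValueError(f"{category} score {value} is outside 0 to 5")
    return sum(scores.values())


def vote(previous: Dict[str, int], current: Dict[str, int]) -> str:
    """Pick the entry of the pair with the higher total."""
    prev_total, cur_total = total(previous), total(current)
    if prev_total > cur_total:
        return "previous"
    if cur_total > prev_total:
        return "current"
    return "tie"  # assumption: a tie is left to a gut call


# Made-up marks for one pair of entries.
previous_entry = {"clarity": 4, "novelty": 2}
current_entry = {"clarity": 3, "novelty": 4}
print(vote(previous_entry, current_entry))
```

Only the comparison between the two totals ever reaches the voting system. If my marks drift lower over the day, both totals in a pair drift with them, which may soften the effect but does not remove it.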

I can say, however, that I learned something from every single entry that I judged, even the ones I disliked or that left me feeling unqualified. Even with the project still in the midst of the judging phase, I call it a success and well worth my participation. The idea was to be educational, not polished, not perfect, not universally liked. I commend the organizers for the effort, and I'm eager to provide feedback to improve any future iterations of this Summer of Math Exposition.

As for my own entry, I don't believe I have any chance of winning. I'm just happy to have participated. I have a lot of experience giving conference presentations, and this contest entry gave me the opportunity to see if I can shift that skill into video presentation. It's a tough thing to do. I did this video in one take because I didn't want to have to edit. Video editing is a skill that I do not yet have, and I'm not inclined to learn. I resorted to leaning on a friend for editing assistance.

Many thanks to Ulf Logan for his efforts using DaVinci Resolve to put some polish on my video.